A Practical Guide to Measuring Customer Satisfaction

Johannes

Co-Founder

5 Minutes

July 22nd, 2025

Figuring out if your customers are happy isn't just a feel-good exercise. It's about translating their feelings into hard data you can actually use—data that drives loyalty, stops customers from walking away, and ultimately, grows your revenue.

Why Measuring Customer Satisfaction Is A Growth Engine

In a market packed with options, how your customers feel is a direct line to your future success. This isn't a "nice-to-have" metric; it's a critical health indicator for your business. A satisfied customer might stick around, but a genuinely happy one? They become your biggest fan and a walking advertisement.

Ignoring customer sentiment is like flying blind. You won't know why people are leaving until they're long gone, and you'll completely miss the boat on opportunities to make your product or service better. The companies that are winning today treat satisfaction not as a vanity metric, but as a leading indicator of revenue and retention.

The Business Impact of Customer Feedback

The link between customer experience and your bottom line is crystal clear. Businesses are pouring money into this area because the return is undeniable. The global customer service software market is already valued at around $14.9 billion and is projected to hit an incredible $68.19 billion by 2031. That's a massive industry-wide bet on the power of better customer interactions. You can dig deeper into these customer service benchmarks to see what this trend means for the market.

This investment is all based on one simple truth: happy customers spend more and are far less likely to churn. When you proactively measure satisfaction, you can:

- Reduce Churn: Spot unhappy customers before they decide to leave.

- Increase Loyalty: Build real relationships by showing you're listening.

- Drive Revenue: Satisfied customers are your best bet for repurchases and upgrades.

- Improve Products: Get direct, honest feedback to guide your development roadmap.

“Satisfaction is often treated as a win. A green metric. A notification that everything is going well. But in reality, it means that nothing went wrong. And that’s the problem.”

This quote nails it. "Satisfied" is just the baseline, not the finish line. A merely satisfied customer is neutral territory—they can easily be swayed by a competitor who offers a slightly better experience. Real, sustainable growth comes from creating memorable, positive moments that turn customers into advocates.

Core Metrics for Measuring Satisfaction

To get started, you need a clear framework. While you could track dozens of things, three metrics form the bedrock of any solid customer feedback program. Each one answers a different, vital question about your customer's experience.

Let's break down the "big three" so you know what they are, what they tell you, and when to pull each one out of your toolkit.

Core Customer Satisfaction Metrics At A Glance

| Metric | What It Measures | Best Use Case |
|---|---|---|
| CSAT (Customer Satisfaction Score) | Short-term happiness with a specific interaction or product. | Immediately after a support ticket is closed, a purchase is made, or a new feature is used. |
| NPS (Net Promoter Score) | Overall customer loyalty and willingness to recommend your brand. | Periodically (e.g., quarterly or semi-annually) to gauge brand health and identify promoters. |
| CES (Customer Effort Score) | The ease of a customer's experience when trying to get something done. | After a customer completes a specific task, like getting help from support or finding information online. |

Getting a handle on these metrics is your first step. It's how you move from guessing what customers want to knowing what they need.

Now, we'll get into the practical side of things: how to use these metrics, design surveys that people will actually answer, and turn all that data into meaningful change. We'll start by taking a closer look at each core metric.

Choosing The Right Customer Satisfaction Metrics

Picking the right way to measure customer satisfaction isn't about finding one single, perfect metric. It's really about asking the right questions at the right time. The big three—CSAT, NPS, and CES—each give you a different lens to look through. Don't think of them as competing options; they're complementary pieces of a complete feedback puzzle.

Your goal should be to get beyond just collecting scores. The real magic happens when you understand what those scores are telling you about your business and, more importantly, your relationship with your customers.

CSAT for In-The-Moment Feedback

Customer Satisfaction Score (CSAT) is your go-to for capturing immediate, transactional feedback. It's beautifully simple and answers the question: "How satisfied were you with this specific interaction?"

Picture this: a customer just finished a chat with your support team. The moment that chat window closes, a quick CSAT survey pops up. This gives you an instant read on that specific experience. Was the agent helpful? Was the problem solved fast? This is where CSAT really shines.

Use CSAT to get a pulse on key touchpoints like:

- Post-Purchase: Right after a customer checks out.

- After a Support Interaction: The second a support ticket is closed.

- Following a Feature Launch: When a user gives a new feature its first spin.

The beauty of CSAT is its immediacy. It acts as an early warning system, helping you spot and fix small problems with your processes before they grow into bigger, more damaging issues.
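To make the score itself concrete: CSAT is commonly reported as the percentage of respondents who chose one of the top ratings. Here is a minimal sketch, assuming a 1-5 rating scale where 4 and 5 count as "satisfied". The function name `csat_score` and the sample ratings are made up for illustration.

```python
from typing import Iterable


def csat_score(ratings: Iterable[int], satisfied_min: int = 4) -> float:
    """Return the percentage of ratings at or above `satisfied_min`.

    Assumes a 1-5 scale where 4 and 5 count as "satisfied".
    """
    ratings = list(ratings)
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


# Hypothetical ratings collected right after support tickets were closed.
responses = [5, 4, 3, 5, 2, 4, 5, 1, 4, 5]
print(f"CSAT: {csat_score(responses):.0f}%")  # 7 of 10 are satisfied -> 70%
```

If you use a different scale (say 1-7), adjust `satisfied_min` so it still marks the point where a rating clearly means "satisfied".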

NPS for Long-Term Loyalty

While CSAT tells you about happiness right now, Net Promoter Score (NPS) zooms out to look at the bigger picture. It gauges overall brand loyalty by asking a completely different kind of question: "How likely are you to recommend our brand to a friend or colleague?"

NPS isn't tied to one transaction. It reflects the sum of all experiences a customer has had with your company. A strong NPS is a fantastic leading indicator for growth because it means you have a healthy base of Promoters ready to spread the word for you.

Deploy NPS more strategically to check on your brand's overall health:

- Quarterly or Semi-Annually: This helps you track loyalty trends over time.

- After Key Milestones: For example, after a customer hits their 90-day mark or renews a subscription.

This metric is powerful because it slices your customer base into Promoters (scores of 9-10), Passives (7-8), and Detractors (0-6) on the standard 0-10 scale. That segmentation is gold for strategic planning: it shows you who your biggest fans are and which at-risk customers need some extra attention.
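For reference, the standard NPS calculation works on a 0-10 scale: respondents scoring 9-10 are Promoters, 7-8 are Passives, and 0-6 are Detractors, and the score is the percentage of Promoters minus the percentage of Detractors. The sketch below shows that arithmetic; the function names and sample scores are illustrative only.

```python
from collections import Counter
from typing import Iterable


def nps_bucket(score: int) -> str:
    """Classify a 0-10 answer into the standard NPS groups."""
    if not 0 <= score <= 10:
        raise ValueError(f"score out of range: {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(scores: Iterable[int]) -> float:
    """Return % promoters minus % detractors, a value from -100 to 100."""
    buckets = Counter(nps_bucket(s) for s in scores)
    total = sum(buckets.values())
    if total == 0:
        raise ValueError("need at least one score")
    return 100.0 * (buckets["promoter"] - buckets["detractor"]) / total


# Hypothetical quarterly survey answers.
scores = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(scores))  # 5 promoters, 3 detractors of 10 -> 20.0
```

Passives count toward the total but not toward either side, which is why a base full of 7s and 8s yields an NPS close to zero.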

CES to Uncover Customer Friction

Customer Effort Score (CES) gets right to the heart of a major source of frustration: difficulty. It measures how easy you make it for customers to get things done by asking: "How much effort did you personally have to put forth to handle your request?"

Study after study shows that reducing customer effort is a massive driver of loyalty. When people have to jump through hoops, their satisfaction craters, even if they eventually get what they wanted.

A low-effort experience is one of the most reliable predictors of customer retention. If you make things easy for your customers, they are far more likely to stick around.

CES is incredibly useful after specific tasks:

- Resolving a support issue: Did the customer have to repeat their problem to three different people?

- Finding information: How hard was it to find an answer in your knowledge base?

- Making a return: Was the return process a walk in the park or a confusing nightmare?

By directly measuring effort, you can find and smooth out the friction points in your customer journey. Just remember, the insights you get are only as good as the survey you send. Crafting effective questions is key, and you can get some great tips in our guide to boosting survey response rates.
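There is no single agreed way to score CES, so treat the following as one possible sketch. It assumes the effort question above is answered on a 1-5 scale where 1 means "very low effort" and 5 means "very high effort", so lower is better. It reports the average plus the share of high-effort answers, which is often the more actionable number. All names and data are illustrative.

```python
from statistics import mean
from typing import Sequence


def customer_effort_score(ratings: Sequence[int]) -> float:
    """Average effort rating (assumed scale: 1 = very low, 5 = very high)."""
    if not ratings:
        raise ValueError("need at least one rating")
    return float(mean(ratings))


def high_effort_share(ratings: Sequence[int], high_effort_min: int = 4) -> float:
    """Percentage of respondents who reported high effort."""
    if not ratings:
        raise ValueError("need at least one rating")
    high = sum(1 for r in ratings if r >= high_effort_min)
    return 100.0 * high / len(ratings)


# Hypothetical answers collected after customers completed a return.
effort = [1, 2, 2, 5, 4, 1, 3, 2]
print(customer_effort_score(effort))  # 2.5
print(high_effort_share(effort))      # 2 of 8 -> 25.0
```

If your survey uses an agreement scale instead ("The company made it easy to handle my issue"), higher is better and the thresholds flip.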

Combining Metrics for a Complete View

If you only rely on one metric, you're going to have blind spots. A customer might be happy with their latest purchase (high CSAT) but still be unlikely to recommend your brand overall (low NPS). This is exactly why using multiple metrics is so critical.

Recent industry data backs this up. A study from the Qualtrics XM Institute found that while customer satisfaction levels are pretty stable, other key loyalty indicators like trust and intent to repurchase are actually dropping. This suggests that "satisfaction" alone doesn't tell the whole story. You can explore the full 2025 global study findings to see this for yourself.

Think of your metrics like a car's dashboard. CSAT and CES are like checking your tire pressure and oil levels—they monitor specific, vital systems. NPS is like checking the overall health of the engine. You need all three to get a complete, accurate picture of your performance and ensure you're not just creating one-off happy moments, but building real, lasting loyalty.

Designing Surveys That People Actually Answer

Even the most brilliant customer satisfaction metrics are worthless if your surveys get ignored. A truly great feedback program is built on surveys that feel less like a chore and more like a quick, valued conversation. The goal isn't just high response rates—it's getting honest, thoughtful answers.

So, let's ditch the long, templated questionnaires from the dark ages. Effective survey design today is all about respecting your customer's time, being crystal clear about what you're asking, and making the whole process completely effortless.

Keep It Short and Focused

The number one killer of response rates? Survey fatigue. It's that feeling a customer gets when they see a progress bar that barely budges or a never-ending scroll of questions. They'll just bail.

The golden rule for survey length is simple: make it as short as humanly possible.

A transactional survey, like a quick CSAT poll after a support ticket is closed, should be almost invisible—one or two questions, max. A bigger-picture relational survey, like a quarterly NPS check-in, can be a little longer. Even then, you should aim to keep it under five minutes. Showing you respect their time is the first step to earning their feedback.

Writing Unbiased and Clear Questions

How you word a question can completely warp the answers you get. You have to be careful. Leading questions, confusing jargon, or double-barreled questions (asking two things at once) will only frustrate your users and give you messy, unreliable data.

- Avoid Leading Questions: Instead of asking, "How amazing was our new feature?" try a more neutral, "How would you rate your experience with our new feature?" The first one practically begs for a positive review, while the second one invites an honest opinion.

- Use Simple Language: Drop the internal acronyms and technical speak. A question like, "Did our API's idempotency key handling meet your expectations?" will only work for a very specific, technical audience. For everyone else, stick to plain, direct language.

- Ask One Thing at a Time: It's a classic mistake. "Was our website easy to navigate and did you find what you were looking for?" This is actually two questions in one. Split them up to get clean, actionable data on both website navigation and information discovery.

Your questions should be a clear window, not a foggy mirror. The user should instantly understand what you're asking without having to decipher your intent. This clarity is fundamental to collecting accurate satisfaction data.

Thinking through your question design is a huge part of this process. For more ideas on how to frame questions for the best results, check out our deep dive into crafting effective customer experience surveys.

The Power of the Open-Ended Question

While multiple-choice and rating scales give you the "what," open-ended questions deliver the "why." They are your direct pipeline to the rich, qualitative goldmine hiding behind the scores.

A single, well-placed question like, "What's one thing we could do to improve your experience?" can reveal more actionable insights than a dozen numerical ratings. This is where you'll find the specific friction points, brilliant feature ideas, and genuine praise you can share with your team.

Just don't overdo it. One or two optional open-ended questions are plenty. Forcing someone to write an essay will send your completion rates plummeting. But simply providing the space for them to share their thoughts is invaluable.

Design for Mobile First

Here’s a simple truth: a huge chunk of your customers will open your survey on their phone. If your survey isn't built for a small screen—with big tap targets, readable fonts, and minimal scrolling—you're basically telling them not to bother.

Every single survey you create needs a mobile-first mindset.

Test it on your own phone before you hit send. Can you easily tap the buttons? Does the text wrap correctly? Is the experience fast and smooth? A clunky mobile survey signals that you don't really value their feedback. A seamless one shows you respect their time enough to make it easy.

Deploying Surveys To Capture In-The-Moment Feedback

A well-designed survey is only half the battle. The other half is getting it in front of the right customer at exactly the right moment. Choosing the right survey distribution methods and timing can make or break your entire feedback program.

Effective deployment is all about precision. You need to capture insights when they are most fresh and relevant.

This is where a tool like Formbricks really shines. Instead of just blasting out surveys via email and hoping for the best, you can trigger them based on specific user actions right inside your product. This “in-the-moment” approach dramatically increases the quality of your feedback because the experience is still fresh in the customer's mind.

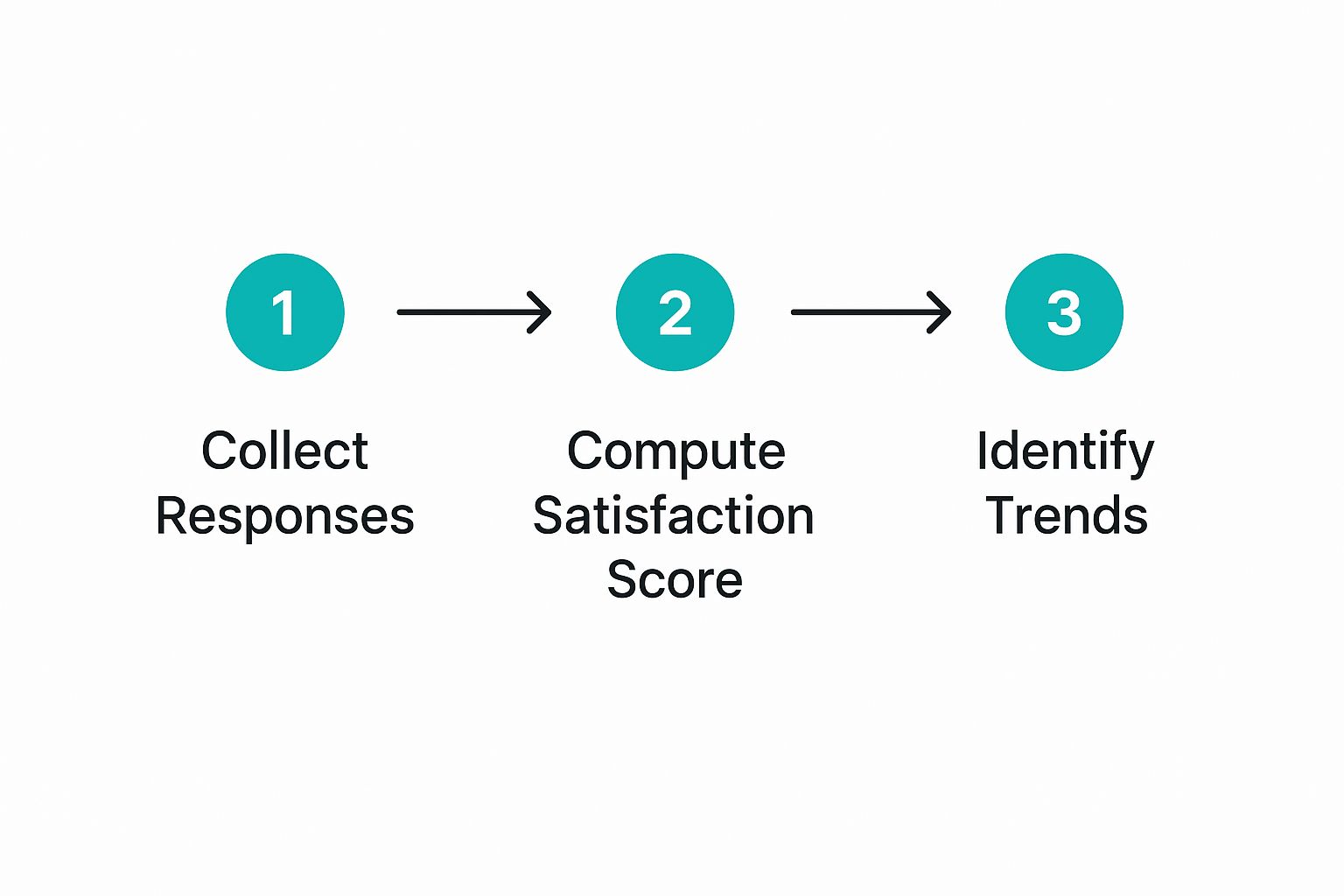

Setting Up Your First In-Product Survey

Getting your first survey live is simpler than you might think. With modern tools, you don't need to be a developer to get started. You can lean on pre-built templates for common use cases like CSAT, NPS, or even churn prevention.

This is what you'll see in Formbricks—a whole library of ready-to-go survey templates. For comprehensive frameworks that combine CSAT, NPS, and other metrics into a complete VoC program, check out our voice of customer templates.

Starting with a template saves a ton of time and makes sure you're asking proven, effective questions right from day one.

But the real magic is in the customization. You can easily tweak the look and feel—colors, logos, and fonts—to perfectly match your brand. This small step makes the survey feel like a natural part of your app, not some jarring third-party popup. A survey that feels integrated builds trust and, you guessed it, gets higher response rates.

The Art of Strategic Survey Triggers

The most critical part of measuring customer satisfaction is deciding when and where to ask for feedback. The goal is to make the survey feel like a logical next step in the user's journey, not an annoying interruption.

Here are a few high-impact moments to trigger a survey:

- After a key action is completed: Did a user just upgrade their plan, export a report for the first time, or invite a new team member? These are golden opportunities to gauge satisfaction with a core feature.

- Following a support interaction: The best time to ask about support quality is immediately after a ticket is resolved. A simple CSAT survey will tell you if your team is hitting the mark.

- When a user tries a new feature: Launching something new? Trigger a short survey for users who have engaged with it at least once. This gives you immediate, actionable feedback to iterate on.

- On exit intent: If a user is about to leave a key page, like your pricing or checkout page, an exit-intent survey can help you understand what's holding them back.

By triggering surveys based on specific user behavior, you move from generic feedback to highly contextual insights. You're not just asking, "Are you happy?" You're asking, "How was that specific thing you just did?"—which is a much more powerful question.
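Conceptually, behavior-based triggering is just a mapping from the events your product already tracks to the survey that fits that moment. The sketch below is a generic illustration, not the Formbricks API: the event names and survey identifiers are hypothetical and would come from your own tracking plan.

```python
from typing import Optional

# Hypothetical event names mapped to hypothetical survey identifiers.
SURVEY_TRIGGERS = {
    "plan_upgraded": "csat_upgrade",
    "support_ticket_resolved": "csat_support",
    "feature_first_used": "feature_feedback",
    "pricing_exit_intent": "exit_intent",
}


def survey_for_event(event_name: str) -> Optional[str]:
    """Return the survey to show for an event, or None if nothing should fire."""
    return SURVEY_TRIGGERS.get(event_name)


print(survey_for_event("support_ticket_resolved"))  # csat_support
print(survey_for_event("page_viewed"))              # None
```

Keeping the mapping in one place makes it easy to review which moments you interrupt and to remove a trigger that turns out to be annoying.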

Avoiding Survey Fatigue With Smart Targeting

Nothing annoys users more than being constantly bombarded with surveys. This is why intelligent targeting and frequency management are non-negotiable. An effective survey platform gives you granular control over who sees a survey and how often.

You can set rules to avoid over-surveying your audience:

- Recontact Windows: Set a cool-down period (e.g., 30 or 60 days) to prevent the same user from seeing another survey too soon.

- Segment-Based Targeting: Show surveys only to specific user segments. For example, you might target users on a "Pro Plan" who have been active in the last 7 days.

- Trigger Limits: Cap how many times a survey is shown to a single user. You could show a feature feedback survey a maximum of three times, for instance.

This level of control is crucial for maintaining a healthy relationship with your users. The objective is to gather insights to improve their experience, not to hurt that experience in the process. By being thoughtful and strategic with your deployment, you ensure your feedback program is a welcome conversation, not an unwelcome pest.
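The three rules above can be combined into a single eligibility check that runs before any survey is shown. This is a minimal sketch with made-up field names and defaults (a "pro" plan, 7 days of activity, a 30-day recontact window, at most three displays). A survey platform would normally handle this for you, so treat it as an illustration of the logic rather than any tool's actual implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class UserSurveyState:
    plan: str
    last_active: datetime
    last_surveyed: Optional[datetime]  # None if the user has never seen a survey
    times_shown: int                   # how often this survey was already displayed


def is_eligible(
    user: UserSurveyState,
    now: datetime,
    target_plan: str = "pro",
    active_within_days: int = 7,
    recontact_days: int = 30,
    max_displays: int = 3,
) -> bool:
    # Segment-based targeting: right plan and recently active.
    if user.plan != target_plan:
        return False
    if now - user.last_active > timedelta(days=active_within_days):
        return False
    # Recontact window: skip users who saw any survey too recently.
    if user.last_surveyed is not None:
        if now - user.last_surveyed < timedelta(days=recontact_days):
            return False
    # Trigger limit: cap how often this survey is displayed.
    return user.times_shown < max_displays


now = datetime(2025, 7, 22)
fresh = UserSurveyState("pro", now - timedelta(days=2), None, 0)
recently_asked = UserSurveyState("pro", now - timedelta(days=1), now - timedelta(days=10), 1)
print(is_eligible(fresh, now))           # True
print(is_eligible(recently_asked, now))  # False: inside the 30-day window
```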

Right, so you've collected a bunch of customer satisfaction scores. What now? Getting those raw numbers—your NPS, CSAT, or CES—is really just the starting line. They're indicators, sure, but they don't actually tell you what to do next.

The real magic happens when you apply customer experience analytics to turn that stream of data from just noise into a clear, actionable game plan. Because let's be honest, data without action is just an expensive hobby. To make any of this worthwhile, you have to dig in and understand the context behind the scores. That's where you'll find the specific pain points and brilliant opportunities that lead to real, meaningful change.

From Scores To Stories With Segmentation

A single, aggregate score can be dangerously misleading. An overall CSAT of 85% might look great on a dashboard, but it could be hiding a serious problem that's frustrating your most valuable customers. The first thing I always do is break down the data through segmentation.

Segmentation is just a fancy word for slicing your feedback by different user attributes to see what's really going on. Think of it like using a prism to split white light into a rainbow—suddenly, you can see all the individual colors that were invisible before.

You can segment your feedback by almost anything, but here are a few places I've found to be incredibly revealing:

- User Persona: Are your "power users" way happier than your "new trial users"? That might point to an onboarding issue.

- Subscription Plan: If customers on your "Enterprise" plan are consistently less satisfied, that’s a huge red flag you need to jump on immediately.

- Customer Journey Stage: Does satisfaction start sky-high during onboarding but take a nosedive after 90 days? You could have a problem with delivering long-term value.

- Product Area: Are people raving about your reporting features but getting stuck on integrations? Now you know where to focus your product roadmap.

Comparing segments like this takes you from a vague "some customers are unhappy" to a specific, solvable problem like, "New users on our free plan are struggling with the initial project setup." And that gives you a concrete place to start.
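Here is a small sketch of that kind of breakdown: the same CSAT calculation, grouped by one attribute of each response. It assumes a 1-5 rating scale with 4 and 5 counted as satisfied. The field names (`plan`, `rating`) and the sample data are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def csat_by_segment(
    responses: Iterable[Mapping], key: str, satisfied_min: int = 4
) -> Dict[str, float]:
    """Return CSAT (% of ratings >= satisfied_min) for each value of `key`."""
    counts = defaultdict(lambda: [0, 0])  # segment -> [satisfied, total]
    for response in responses:
        bucket = counts[response[key]]
        if response["rating"] >= satisfied_min:
            bucket[0] += 1
        bucket[1] += 1
    return {seg: 100.0 * sat / total for seg, (sat, total) in counts.items()}


# Hypothetical survey responses tagged with the customer's plan.
survey = (
    [{"plan": "pro", "rating": r} for r in (5, 4, 5, 5)]
    + [{"plan": "free", "rating": r} for r in (4, 5, 3, 5)]
    + [{"plan": "enterprise", "rating": r} for r in (2, 3, 4, 2)]
)
print(csat_by_segment(survey, "plan"))
```

In this made-up data, two of the three plans look healthy while the Enterprise segment sits at 25%, which is exactly the kind of gap a single blended score would hide.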

Uncovering The "Why" With Qualitative Analysis

While scores tell you what is happening, it's the open-ended comments that tell you why. This qualitative feedback is an absolute goldmine. I know, analyzing a wall of text comments can feel like a chore, but a few simple techniques make it totally manageable.

I always start by using tags or categories to get organized. As you read through the comments, just apply simple tags like "bug," "feature request," "pricing," or "slow performance." Most modern tools, including Formbricks, have features to help you do this, so you can quickly see which themes are popping up most often.

A classic trap is to only focus on the negative stuff. Don't forget to tag and analyze the positive feedback, too. Knowing what customers absolutely love about your product is just as important as knowing what they hate. It tells you what to protect and double down on.

Next, you can layer on sentiment analysis. This process automatically flags comments as positive, negative, or neutral. It’s a great way to get a quick, high-level read on the overall mood and spot trends faster. For example, you might see a sudden spike in negative sentiment right after a new feature release, which tells you to dig into those specific comments right away.
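If you want a feel for how the tagging pass works before reaching for a tool, a few keyword rules go a long way. The sketch below is deliberately naive: the keyword lists are hypothetical, matching is plain substring search, and it is no substitute for reading the comments or for proper sentiment analysis.

```python
from collections import Counter
from typing import Iterable, List

# Hypothetical keyword rules; tune these to the language your customers use.
TAG_KEYWORDS = {
    "bug": ("bug", "crash", "error", "broken"),
    "feature request": ("please add", "would be great", "wish"),
    "pricing": ("pricing", "price", "expensive"),
    "slow performance": ("slow", "takes forever"),
}


def tag_comment(comment: str) -> List[str]:
    """Return every tag whose keywords appear in the comment."""
    text = comment.lower()
    return [
        tag
        for tag, keywords in TAG_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]


def tag_counts(comments: Iterable[str]) -> Counter:
    """Count how often each tag appears across all comments."""
    return Counter(tag for comment in comments for tag in tag_comment(comment))


comments = [
    "The export keeps crashing with an error.",
    "Please add a dark mode, it would be great.",
    "Dashboard is slow and the pricing feels expensive.",
    "Reports are slow to load.",
    "Love the new reports!",
]
print(tag_counts(comments).most_common())
```

Comments that match no rule (like the last one) come back untagged, which is a useful signal that your tag list is missing a category such as "praise".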

If you want to go deeper on this, our guide on analyzing customer feedback has a bunch of practical steps to get you started.

Closing The Loop To Drive Change

This is the final, and in my opinion, most important piece of the puzzle: creating a closed-loop feedback system. All this means is making sure the insights you gather actually get to the teams who can fix things—and that your customers know you listened.

A closed-loop system is all about accountability. When a customer takes the time to give you feedback, the absolute worst thing you can do is let it vanish into a black hole.

Here’s a simple framework that works:

- Route Insights: Automatically pipe product-related feedback into the Product team's Slack channel. Send bug reports straight into your engineering team's project management tool. Funnel comments about pricing over to Marketing and Sales.

- Take Action: Give teams the power to use this feedback to make actual changes. That could mean fixing a bug, building a requested feature, or just clarifying some confusing help documentation.

- Communicate Back: This is the part people forget. When you fix an issue a customer reported, tell them! A simple, personal email can turn a frustrating experience into a moment that builds incredible loyalty.
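The "Route Insights" step can start as a simple lookup from feedback tags to the team that owns them. The sketch below only decides where a piece of feedback should go; actually posting to Slack or a project management tool is left out. The destination names are placeholders, not real integrations.

```python
from typing import Iterable, List

# Hypothetical destinations; replace with your own channels and tools.
ROUTES = {
    "feature request": "product-slack-channel",
    "bug": "engineering-issue-tracker",
    "pricing": "marketing-and-sales-inbox",
}
DEFAULT_ROUTE = "feedback-triage"


def route_feedback(tags: Iterable[str]) -> List[str]:
    """Return the destinations for a tagged comment, without duplicates."""
    destinations: List[str] = []
    for tag in tags:
        destination = ROUTES.get(tag, DEFAULT_ROUTE)
        if destination not in destinations:
            destinations.append(destination)
    # Untagged feedback still lands somewhere a human will see it.
    return destinations or [DEFAULT_ROUTE]


print(route_feedback(["bug", "pricing"]))
print(route_feedback([]))  # ['feedback-triage']
```

The default route matters as much as the named ones: it is what keeps unclassified feedback from vanishing into a black hole.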

This process has never been more critical. Forrester's research shows a worrying decline in customer loyalty worldwide. Brands that don't act on feedback are going to get left behind. By closing the loop, you prove to customers that their voice matters, and you build the trust that keeps them around for the long haul.

Common Questions About Measuring Customer Satisfaction

Even with the perfect strategy, a few practical questions always pop up once you get into the nitty-gritty of measuring customer satisfaction. Let's tackle some of the most common ones I hear from teams. Getting these details right is how you move from just collecting data to gathering feedback that truly makes a difference.

How Often Should I Measure Customer Satisfaction?

This is all about striking the right balance. You need enough data to see trends, but you don't want to bombard your customers until they develop "survey fatigue." The secret isn't a magic number; it's matching your survey's timing to its purpose.

I like to think of it in two different rhythms:

- Transactional Feedback (CSAT & CES): These need to be immediate. Trigger them right after a specific event, like a support ticket being closed or a purchase being finalized. The memory is fresh, and the feedback will be far more accurate and specific.

- Relational Feedback (NPS): This is your big-picture metric for loyalty. You want to measure this at a regular, predictable interval—maybe quarterly or semi-annually. This cadence allows you to track brand health over the long haul and see how customer perception evolves.

Ultimately, your goal is to find a rhythm that feels natural, not intrusive.

What Is a Good Survey Response Rate?

Every team I've ever worked with asks this, and the honest answer is: it depends. There's no universal "good" number. Response rates swing wildly based on your industry, the channel you use, and how engaged your customers are in the first place.

For context, internal surveys can sometimes pull in 30-40%, while external customer surveys typically land somewhere between 5% and 30%. One thing I've consistently seen is that in-app surveys almost always beat email surveys because you're catching people at the exact moment they're interacting with your product.

Don't get hung up on hitting an industry average. The real goal is to consistently improve your own response rate over time. Small tweaks to your survey's timing, wording, or personalization can make a huge difference.
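Tracking your own rate over time only needs two numbers per survey and channel: how many people saw it and how many answered. A minimal sketch, with entirely made-up counts:

```python
def response_rate(responses: int, delivered: int) -> float:
    """Return responses as a percentage of surveys delivered or displayed."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    if not 0 <= responses <= delivered:
        raise ValueError("responses must be between 0 and delivered")
    return 100.0 * responses / delivered


# Hypothetical counts for one month, per channel: (responses, delivered).
channels = {"in_app": (120, 800), "email": (90, 1500)}
for name, (answered, shown) in channels.items():
    print(f"{name}: {response_rate(answered, shown):.1f}%")
```

Compare each channel against its own previous months rather than against the other channel, since the audiences and contexts differ.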

My Satisfaction Scores Are Low. What Should I Do First?

First off, don't panic. I know it can feel like a punch to the gut, but low scores aren't bad news. They're a gift. You've just been handed a roadmap pointing directly to what you need to fix.

Take a deep breath and get methodical.

Your first move is to dive into the qualitative data. The numbers tell you what is happening, but the open-ended comments tell you why. This is where you'll find the root cause of the frustration.

Next, slice and dice the data. Are the low scores coming from a specific customer segment? Are they tied to a particular feature? Do they happen at a certain point in the customer journey? Pinpointing the source is half the battle.

Finally, prioritize and then communicate. You can't fix everything at once, so focus on the issues that cause the most pain for your most valuable customers. Once you have a plan, tell your customers you heard them and are working on it. Closing that feedback loop is a powerful way to start rebuilding trust and shows them their voice actually matters.

Ready to stop guessing and start understanding what your customers really think? With Formbricks, you can launch targeted, in-product surveys in minutes. Collect actionable feedback, analyze trends, and build a better product with our open-source experience management suite. Start for free on Formbricks.
